This is one of those ‘nice to know in case I ever need it’ kind of topics for me. VRF stands for virtual routing and forwarding, and it is a technique to divide the routing table on a router into multiple virtual tables.

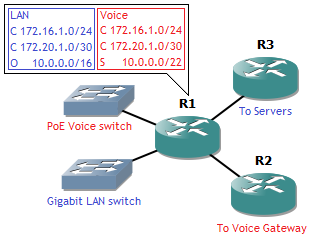

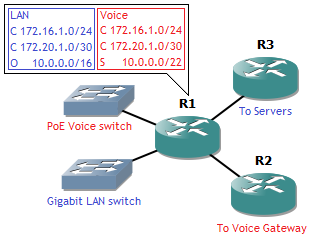

How does this work, and more importantly, why would you need it? It provides complete separation of networks on the same router, so the router can be shared between different services, departments or clients. Consider the following example:

Say you have to design an office with desks and IP phones. You already have one gigabit switch in stock, and the budget allows for one 100 Mbps PoE switch. The manager insists on gigabit connections to the desktops, and also that the voice network and data network are completely separated, with voice using static routes and the LAN using dynamic routing. However, you only get one router for the office itself. (That isn’t good redundant design, but leave that aside.) A good choice here is to connect all IP phones to the PoE switch and all desktops to the gigabit switch, and connect both switches to the router. But since complete separation is needed, there can’t be any routing between the two. This is where VRF comes into play.

I’ll explain while showing the configuration. First, define VRF instances. Since there needs to be separation between IP Phones and desktops here, I’ll make two instances: VOICE and LAN.

R1(config)#ip vrf VOICE

R1(config-vrf)#exit

R1(config)#ip vrf LAN

R1(config-vrf)#exit

This separates the router into two logical routers. Strictly speaking there are three instances, since the default (global) instance is still there, but it’s best not to use that one since it gets confusing. Next is assigning interfaces to their VRF. Let’s assume the PoE voice switch is connected on Gigabit 0/0, and the gigabit switch on Gigabit 0/1. On Gigabit 0/2, there’s an uplink to the server subnets, and on Gigabit 0/3, there’s an uplink to the voice gateway.

R1(config)#interface g0/0

R1(config-if)#ip vrf forwarding VOICE

R1(config-if)#ip address 172.16.1.1 255.255.255.0

R1(config-if)#no shutdown

R1(config-if)#exit

R1(config)#interface g0/1

R1(config-if)#ip vrf forwarding LAN

R1(config-if)#ip address 172.16.1.1 255.255.255.0

R1(config-if)#no shutdown

R1(config-if)#exit

R1(config)#interface g0/2

R1(config-if)#ip vrf forwarding LAN

R1(config-if)#ip address 172.20.1.1 255.255.255.252

R1(config-if)#no shutdown

R1(config-if)#exit

R1(config)#interface g0/3

R1(config-if)#ip vrf forwarding VOICE

R1(config-if)#ip address 172.20.1.1 255.255.255.252

R1(config-if)#no shutdown

R1(config-if)#exit

You may have noticed something odd here: overlapping IP addresses and subnets on the interfaces. This is what VRF is all about: you can consider VOICE and LAN as two different routers. And one router will not complain if another router has the same IP address, unless they’re directly connected. But since VOICE and LAN do not share interfaces, as odd as this may sound, they are not connected to each other.
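To check which interface ended up in which VRF, something like the following should work on IOS releases that accept the `ip vrf` syntax used in this post (a sketch only; output is omitted because it varies by platform and version). One caveat worth knowing: applying `ip vrf forwarding` to an interface that already has an IP address removes that address, which is why the VRF is assigned before the address in the configuration above.

```
! List the configured VRFs and the interfaces bound to each
R1#show ip vrf
! List interfaces with their IP address, VRF and protocol state
R1#show ip vrf interfaces
```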

To further demonstrate the point, let’s add a static route to the VOICE router, and set up OSPF on the LAN router:

R1(config)#ip route vrf VOICE 10.0.0.0 255.255.252.0 172.20.1.2

R1(config)#router ospf 1 vrf LAN

R1(config-router)#network 0.0.0.0 255.255.255.255 area 0

R1(config-router)#passive-interface g0/0

%Interface specified does not belong to this process

R1(config-router)#passive-interface g0/1

R1(config-router)#exit

OSPF routing is also set up on router R3, towards the server subnets. Note that R2 and R3 do not need to have VRF configured. From their point of view, they are simply connected to a router named VOICE and a router named LAN, respectively. Also note that in the OSPF configuration context I can’t make the Gigabit 0/0 interface passive: the command is rejected because that interface is not part of the LAN router.
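Reachability tests have to name the VRF as well. A minimal sketch, assuming both neighbors use 172.20.1.2 on their side of the /30 uplinks (the static route above uses that address as next hop in VOICE; for the LAN side it is an assumption based on the overlapping addressing):

```
! Ping the voice gateway side (R2) using the VOICE table
R1#ping vrf VOICE 172.20.1.2
! Ping the OSPF neighbor (R3) using the LAN table
R1#ping vrf LAN 172.20.1.2
! Without the vrf keyword the global table is used instead
R1#ping 172.20.1.2
! List OSPF adjacencies; process 1 was created in VRF LAN
R1#show ip ospf neighbor
```

Since every interface in this design is assigned to a VRF, the plain `ping` is expected to fail: the global table has no route to that address.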

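And now the final point that clarifies the VRF concept: the routing tables. (The output below shows FastEthernet interface names instead of the Gigabit names used in the configuration. Going by the VRFs and subnets, FastEthernet0/0 and 0/1 correspond to Gigabit 0/0 and 0/1, FastEthernet1/0 to Gigabit 0/2, and FastEthernet2/0 to Gigabit 0/3.)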

R1#show ip route vrf VOICE

Routing Table: VOICE

Codes: C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2

E1 - OSPF external type 1, E2 - OSPF external type 2

i - IS-IS, su - IS-IS summary, L1 - IS-IS level-1, L2 - IS-IS level-2

ia - IS-IS inter area, * - candidate default, U - per-user static route

o - ODR, P - periodic downloaded static route

Gateway of last resort is not set

172.16.0.0/24 is subnetted, 1 subnets

C 172.16.1.0 is directly connected, FastEthernet0/0

172.20.0.0/30 is subnetted, 1 subnets

C 172.20.1.0 is directly connected, FastEthernet2/0

10.0.0.0/22 is subnetted, 1 subnets

S 10.0.0.0 [1/0] via 172.20.1.2

R1#show ip route vrf LAN

Routing Table: LAN

Codes: C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2

E1 - OSPF external type 1, E2 - OSPF external type 2

i - IS-IS, su - IS-IS summary, L1 - IS-IS level-1, L2 - IS-IS level-2

ia - IS-IS inter area, * - candidate default, U - per-user static route

o - ODR, P - periodic downloaded static route

Gateway of last resort is not set

172.16.0.0/24 is subnetted, 1 subnets

C 172.16.1.0 is directly connected, FastEthernet0/1

172.20.0.0/30 is subnetted, 1 subnets

C 172.20.1.0 is directly connected, FastEthernet1/0

10.0.0.0/16 is subnetted, 1 subnets

O 10.0.0.0 [110/2] via 172.20.1.2, 00:04:04, FastEthernet1/0
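To compare these against the global table, or to narrow a VRF table down to a single route source, commands along these lines can help (again a sketch, with output omitted since it depends on the platform):

```
! Global (default) routing table, no VRF
R1#show ip route
! Only the OSPF-learned routes in VRF LAN
R1#show ip route vrf LAN ospf
! Only the static routes in VRF VOICE
R1#show ip route vrf VOICE static
```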

Different (logical) routers, different routing tables. So by using VRF, you can completely separate your networks, reuse overlapping IP space, and increase security too!