Disclaimer: the logs are taken from a production network but the values (VLAN ID, names) are randomized.

Recently, I encountered an issue on a Campus LAN while performing routine checks: spanning tree seemed to undergo regular changes.

The LAN in question uses five VLANs and RPVST+, a Cisco-only LAN. At first sight there was no issue:

On an access switch, one Root port towards the Root bridge, a few Designated ports (note the P2P Edge) where end devices connect, and an Alternative port in blocking with a peer-to-peer neighborship, which means BPDUs are received on this link.

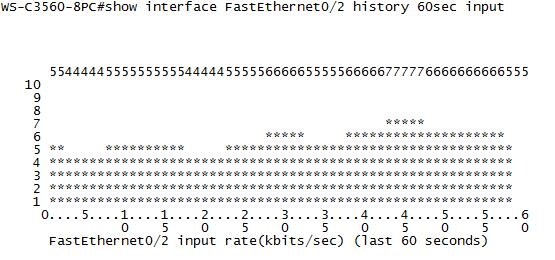

There is a command that allows you to see more detail: ‘show spanning-tree detail’. However, the output from this command is overwhelming so it’s best to apply filters on it. After some experimenting, filtering on the keywords ‘from’,’executing’ and ‘changes’ seems to give the desired output:

This gives a clear indication of something happening in the LAN: VLAN 302 has had a spanning-tree event less than 2 hours ago. Compared to most other VLANs who did not change for almost a year, this means something changed recently. After checking the interface on which the event happened, I found a port towards a desk which did not have the BPDU Guard enabled, just Root Guard. It was revealed that someone regularly plugged in a switch to have more ports, which talked spanning-tree but with a default priority, not claiming root. As such, Root Guard was not triggered, but the third-party switch did participate in spanning-tree from time to time.

Also, as you notice in the image, VLAN 304 seemed to have had a recent event to, on the Alternative Port. After logging in on the next switch I got the following output:

Good part: we have a next interface to track. Bad news: it’s a stack port? Since it’s a stack of 3750 series switches, it has stack ports in use, but the switches should act as one logical unit in regards to management and spanning-tree, right? Well, this is true, but each switch still makes the spanning-tree calculation by itself, which means that it can receive a spanning-tree update of another switch in the stack through the stack port.

Okay, but can you still trace this? You would have to be able to look in another switch in the stack… And as it turns out, you can:

After checking which stack port connects to which switch in the CLI, you can hop to the next one with the ‘session’ command and return to the master switch simply by typing ‘exit’. On the stack member, again doing the ‘show spanning tree detail’ command shows the local port on which the most recent event happened. And again the same thing: no BPDU Guard enabled here.