This article is not really written with knowledge usable for a production network in mind. It’s more of an “I have not failed. I’ve just found 10,000 ways that won’t work.” kind of article.

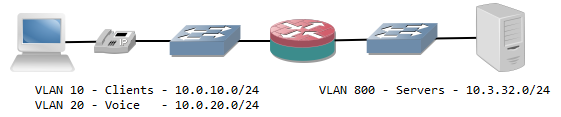

I’m currently in a mailing group with fellow network engineers who are setting up GRE tunnels to each other’s home networks over the public internet. Over those tunnels we speak (external) BGP to each other, and each engineer announces their own private address range. With around 10 engineers so far and a partial mesh of tunnels, it gives a useful topology to troubleshoot and experiment with. Just like the real internet, you don’t know what happens day-to-day: neighborships may go down, new ones may suddenly be added, and other next-hops may suddenly become more attractive for some routes.

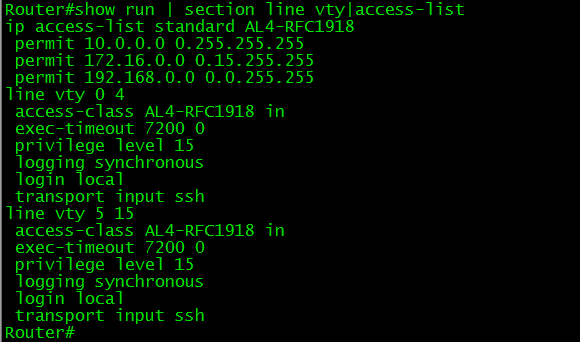

But of course it requires a device at home capable of both GRE and BGP. A Cisco router will do, as will Linux with Quagga and many other industrial routers. But the only device I currently have running 24/7 is my WS-C3560-8PC switch. Although it has an IP Services IOS, is already routing and can do GRE and BGP, it doesn’t do NAT. Easy enough: allow GRE through on the router that does the NAT in the home network. Turns out the old DD-WRT version I have on my current router doesn’t support it. Sure, I could replace it, but that would cost me a new router and it would not be a challenge.

Solution: give the switch a direct public IP address and do the tunnels from there. After all, the internal IP addresses are encapsulated in GRE for transport, so no NAT is required for them. Since the switch already has a default route towards the router, set up host routes (a /32) per remote GRE endpoint. However, this still introduces asymmetric routing: the provider subnet is a connected subnet for the switch, so incoming traffic will go through the router and outgoing traffic will go directly from the switch to the internet without NAT. Of course that will not work.
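For illustration, the host routes mentioned above might look roughly like the sketch below. All addresses are placeholders from the documentation ranges, not the real ones: 192.168.1.1 stands in for the home router, 203.0.113.1 for the provider gateway, and the 198.51.100.x addresses for remote GRE endpoints.

```
! Existing default route towards the home router (placeholder address)
ip route 0.0.0.0 0.0.0.0 192.168.1.1
!
! One /32 host route per remote GRE endpoint, pointing straight at the
! provider gateway instead of the NAT router (placeholder addresses)
ip route 198.51.100.10 255.255.255.255 203.0.113.1
ip route 198.51.100.20 255.255.255.255 203.0.113.1
```

Every new tunnel peer means another static route, which is part of why this approach gets tedious quickly.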

So yet another problem to work around. This can be solved for a large part using Policy-Based Routing (PBR): on the client VLAN interface, redirect all traffic not meant for a private range towards the router. But again, this has complications: the routing table no longer reflects the actual routing being done, there is more administrative overhead, and all packets originated by the switch itself will still follow the default route (the 3560 switch does not support PBR for locally generated packets).

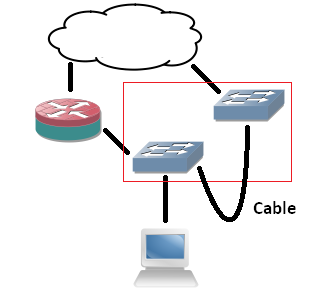

Next idea: it would be nice to have an extra device that can do GRE and BGP directly towards the internet, so that my switch can route private range packets towards it. But the constraint is no new device. So that brings me to VRFs. Split the current 3560 switch in two: one routing table for the internal routing (vrf MAIN), one for the GRE tunnels (vrf BGP). However, to connect the two VRFs on the same physical device I would need to loop a cable from one switchport to another, and I only have 8 ports. The rest would work out fine: point private ranges from a VLAN interface in one VRF to a next-hop VLAN interface over that cable in another VRF. That second VRF can have a default route towards the internet and set up GRE tunnels. The two VRFs would share one subnet.

Since I don’t want to deal with that extra cable, would it be possible to route between VRFs internally? I’ve tried similar actions before, but those required a route-map and a physical incoming interface. I might as well use PBR if I go that way. Internal interfaces for routing between VRFs exist on ASR series, but not my simple 8-port 3560. But what if I replace the cable with tunnel interfaces? Is it possible to put both endpoints in different VRFs? Yes, the 15.0(2) IOS supports it!

The tunnel interfaces have two commands that are useful for this:

- vrf forwarding : just like on any other layer 3 interface, it specifies the routing table used for the packets inside the interface (in the tunnel).

- tunnel vrf : specifies the underlying VRF from which the packets will be sent, after GRE encapsulation.

With these two commands, it’s possible to have tunnels in one VRF transporting packets for another VRF. The concept is vaguely similar to MPLS-VPN, where your intermediate (provider) routers only have one routing table which is used to transport packets towards routers that have the VRF-awareness (provider-edge).

    interface Vlan2
     ip address 192.168.2.1 255.255.255.0
    !
    interface Vlan3
     ip address 192.168.3.1 255.255.255.0
    !
    interface Tunnel75
     vrf forwarding MAIN
     ip address 192.168.7.5 255.255.255.252
     tunnel source Vlan2
     tunnel destination 192.168.3.1
    !
    interface Tunnel76
     vrf forwarding BGP
     ip address 192.168.7.6 255.255.255.252
     tunnel source Vlan3
     tunnel destination 192.168.2.1

So I configure two tunnel interfaces whose source and destination both live in the global routing table (not to be confused with vrf MAIN). Source and destination are two IP addresses locally configured on the switch. I chose VLAN interfaces; loopbacks will likely work as well. Inside the tunnels, one is set to the first VRF, the other to the second. One of the VRFs may be shared with the routing table that carries the tunnels themselves, but it’s not a requirement. Configure both tunnel interfaces as two sides of a point-to-point connection and they come up. Ping works, and even MTU 1500 works over the tunnels, despite the show interface command showing an MTU of only 1476 (1500 minus 24 bytes for the outer IP and GRE headers)!
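A check along these lines could be run from the exec prompt. This is only a sketch using the addresses from the configuration above. The exact commands used for the original test are not recorded here, and whether the df-bit variant succeeds on a given platform is an open question rather than a claim.

```
! Basic reachability from vrf MAIN to the tunnel endpoint in vrf BGP
ping vrf MAIN 192.168.7.6
!
! Same test with a full-size packet and the don't-fragment bit set
ping vrf MAIN 192.168.7.6 size 1500 df-bit
!
! Confirm the tunnel subnet appears as connected in each VRF
show ip route vrf MAIN
show ip route vrf BGP
```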

Next, I set up BGP to be VRF-aware. Logically, there are two ‘routers’, one of which is the endpoint for the GRE tunnels, and another one which connects to it behind it for internal routing. Normally if it were two physical routers, I would set up internal BGP between them since I’m already using that protocol. But there’s no difference here: you can make the VRFs speak BGP to each other using one single configuration.

    router bgp 65000
     address-family ipv4 vrf MAIN
      neighbor 192.168.7.6 remote-as 65000
      network 192.168.0.0 mask 255.255.248.0
      neighbor 192.168.7.6 activate
     exit-address-family
     address-family ipv4 vrf BGP
      bgp router-id 192.168.7.6
      neighbor 192.168.7.5 remote-as 65000
      neighbor 192.168.7.5 activate
     exit-address-family

A few points did surface: you need to specify the neighbors (the IP addresses of the local device in the different VRFs) under the correct address families. You also need to specify a route distinguisher under the VRF as it is required for VRF-aware BGP. And maybe the most ironic: you need a bgp router-id set inside the VRF address-family so it differs from the other VRF (the highest interface IP address by default), otherwise the two ‘BGP peers’ will notice the duplicate router-id and it will not work. But after all of that, BGP comes up and routes are exchanged between the two VRFs! For the GRE tunnels towards the internet, the tunnel vrf command is required in the GRE tunnels so they use the correct routing table for routing over the internet.
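The two pieces not shown in the listings above, the route distinguishers and an internet-facing GRE tunnel using tunnel vrf, might look something like the following sketch. The RD values, Vlan99, Tunnel100 and every address here are hypothetical placeholders; only the VRF names and AS number come from the configuration above.

```
! A route distinguisher per VRF (values are arbitrary placeholders)
vrf definition MAIN
 rd 65000:1
 address-family ipv4
 exit-address-family
!
vrf definition BGP
 rd 65000:2
 address-family ipv4
 exit-address-family
!
! Hypothetical public-facing interface, placed in vrf BGP
interface Vlan99
 vrf forwarding BGP
 ip address 203.0.113.2 255.255.255.0
!
ip route vrf BGP 0.0.0.0 0.0.0.0 203.0.113.1
!
! Hypothetical GRE tunnel to a remote peer
interface Tunnel100
 ! Routing table for the packets inside the tunnel
 vrf forwarding BGP
 ip address 172.16.100.1 255.255.255.252
 tunnel source Vlan99
 tunnel destination 198.51.100.10
 ! Routing table used to reach the tunnel destination after encapsulation
 tunnel vrf BGP
```

Note that vrf forwarding is entered before ip address, because moving an interface into a VRF removes its existing IP address. Session state per VRF can then be inspected with show ip bgp vpnv4 vrf BGP summary.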

So what makes this not production-worthy? The software-switching.

The ASIC can only do a set number of actions in a certain sequence without punting to the switch CPU. Doing a layer 2 CAM table lookup or a layer 3 RIB lookup is one thing. But receiving a packet, having the RIB point it to a GRE tunnel, encapsulating, decapsulating, and then doing a RIB lookup in another VRF is too much. The IOS software simply follows the expected steps in the code: it does not ‘see’ what the point is and does not take shortcuts. GRE headers are actually calculated for each packet traversing the ‘internal tunnel’ link. I’ve done a stress test and the CPU would max out at 100% at… 700 kBps, about 5.6 Mbps. So while this is a very interesting configuration and it gives an ideal situation to learn more, it’s just lab stuff.

So that’s the lesson, as stated in the beginning: how not to do it. Can you route between VRFs internally on a Cisco switch or router (not including ASR series)? Yes. Would you want to do it? No!