On to a bigger platform: the 6500 series, Cisco’s flagship Campus LAN switch. Unlike the previously discussed platform, the 6500 series uses the older Weighted Round Robin (WRR) queueing mechanism, and uses CoS internally to put packets in queues.

Queues and thresholds

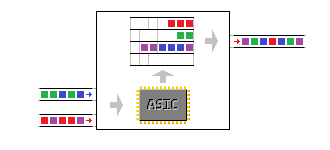

The capabilities per port also differ per line card, and unlike the 3560/3750 series, the 6500 uses multiple ASICs per line card.

Switch#show interfaces GigabitEthernet 1/2/1 capabilities | include tx|rx|ASIC

Flowcontrol: rx-(off,on,desired),tx-(off,on,desired)

QOS scheduling: rx-(1q8t), tx-(1p3q8t)

QOS queueing mode: rx-(cos), tx-(cos)

Ports-in-ASIC (Sub-port ASIC) : 1-24 (1-12)

The above output is of a WS-X6748-GE-TX line card. The ‘1p3q8t’ for egress (tx) means one fixed priority queue and three normal queues, each with eight thresholds. The fixed priority queue cannot be changed to a normal queue: if a packet is in the queue, it will be transmitted next.
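The queue notation can be split mechanically. Below is a minimal Python sketch, assuming the labels always follow the pattern seen in the outputs in this post (an optional priority count followed by ‘p’, a queue count followed by ‘q’, a threshold count followed by ‘t’). The function name is my own and not part of any Cisco tool.

```python
import re

def parse_queue_structure(label):
    """Split a label such as '1p3q8t' into its three counts."""
    match = re.fullmatch(r"(?:(\d+)p)?(\d+)q(\d+)t", label)
    if match is None:
        raise ValueError(f"unrecognised queue structure: {label!r}")
    priority, normal, thresholds = match.groups()
    return {
        "priority_queues": int(priority or 0),   # the 'p' part is optional
        "normal_queues": int(normal),
        "thresholds_per_queue": int(thresholds),
    }

print(parse_queue_structure("1p3q8t"))
print(parse_queue_structure("1q8t"))
```

For ‘1p3q8t’ this gives one priority queue, three normal queues and eight thresholds per queue; for ‘1q8t’ the priority count is zero.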

The ingress ‘1q8t’ means there is one ingress queue with eight thresholds. Unlike the 3560/3750, there is some oversubscription on the line card. It has two ASICs, one per 24 ports (the line card is 48 ports total). Each of these ASICs has a 20 Gbps connection to the 6500 backplane. If all 24 gigabit ports together on that part of the line card start receiving more than 20 Gbps of traffic, the ASIC and backplane connection will not be able to handle all the traffic. Granted, this is a rare event: 24 Gbps maximum throughput on a 20 Gbps capable ASIC is an oversubscription of 1.2 to 1. But in case this happens, the different thresholds can help decide which traffic to drop. However, to drop on ingress, the decision must be made on existing markings. The ASIC does classification and remarking, and the ingress queue is before the ASIC. This is not a problem usually, since classification and marking is best done at the access layer and 6500s are best used for distribution and core layer.

Switch#show interfaces TengigabitEthernet 1/9/1 capabilities | include tx|rx|ASIC

Flowcontrol: rx-(off,on),tx-(off,on)

QOS scheduling: rx-(1p7q2t), tx-(1p7q4t)

QOS queueing mode: rx-(cos,dscp), tx-(cos,dscp)

Ports-in-ASIC (Sub-port ASIC) : 1-8 (1-4)

The WS-X6716-10GE, a 10 GE line card, has different queues, especially for ingress. This line card has a high oversubscription of 4:1 and one ASIC per eight ports, for a total of two ASICs for the 16-port line card. This means that, while eight ports can deliver up to 80 Gbps, the ASIC and backplane connection behind them are still just 20 Gbps. The ASIC is much more likely to get saturated, so ingress queueing becomes important here. The fixed priority queue allows some traffic to be handled by the ASIC with low latency, even when saturated.
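The oversubscription figures for both line cards are simple arithmetic: the total port capacity behind one ASIC divided by that ASIC's connection to the backplane. A small Python sketch, using only the numbers mentioned in this post (the function name is illustrative):

```python
def oversubscription(ports, port_gbps, uplink_gbps):
    """Ratio of total front-panel capacity to the ASIC's backplane capacity."""
    return ports * port_gbps / uplink_gbps

# WS-X6748-GE-TX: 24 gigabit ports share a 20 Gbps connection
print(oversubscription(24, 1, 20))

# WS-X6716-10GE: 8 ten-gigabit ports share a 20 Gbps connection
print(oversubscription(8, 10, 20))
```

The first call works out to 1.2 and the second to 4, matching the 1.2:1 and 4:1 ratios.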

I’m only going to explain these two line cards; the rest are similar. A full list with details per line card can be found here. The logic is similar to the 3560/3750 platform: configure the buffers and the thresholds, but this time for both ingress and egress. First, the ingress queue on the gigabit interface. There is no buffer sizing command for ingress here, as this line card has only one ingress queue.

Switch(config)#interface Gi1/2/1

Switch(config-if)#rcv-queue threshold 1 65 70 75 80 85 90 95 100

Warning: rcv thresholds will not be applied in hardware.

To modify rcv thresholds in hardware, all of the interfaces below

must be put into ‘trust cos’ state:

Gi1/2/1 Gi1/2/2 Gi1/2/3 Gi1/2/4 Gi1/2/5 Gi1/2/6 Gi1/2/7 Gi1/2/8 Gi1/2/9 Gi1/2/10 Gi1/2/11 Gi1/2/12

Switch(config-if)#

That configures the eight thresholds for the first and only queue: threshold 1 at 65%, threshold 2 at 70%, and so on. Note the warning: for ingress queueing, existing CoS markings have to be trusted. Also, remember that the 3560/3750 ingress and buffer allocation commands work switch-wide, because that platform has one ASIC per switch. The X6748 line card on a 6500 has two ASICs, and for QoS each of them is subdivided into two sub-ASICs of twelve ports. Applying a command that changes the ASIC QoS allocations means that the command automatically applies to the other eleven interfaces of that sub-ASIC as well.
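To make the role of the thresholds concrete, here is a deliberately simplified Python model. It assumes plain tail drop: a frame whose CoS is mapped to threshold n is dropped once the queue is filled to that threshold's percentage. The threshold values come from the command above; the function name and the model itself are my simplification, not a description of the actual hardware logic.

```python
# Percentages from 'rcv-queue threshold 1 65 70 75 80 85 90 95 100'
THRESHOLDS = [65, 70, 75, 80, 85, 90, 95, 100]

def is_dropped(queue_fill_percent, threshold_number, thresholds=THRESHOLDS):
    """Return True when a frame mapped to the given threshold would be tail-dropped.

    Simplified model: dropping starts as soon as the queue fill reaches
    the percentage configured for that threshold.
    """
    limit = thresholds[threshold_number - 1]  # thresholds are numbered from 1
    return queue_fill_percent >= limit

# With the queue 72% full, traffic on threshold 1 (65%) is dropped,
# while traffic on threshold 4 (80%) is still accepted.
print(is_dropped(72, 1), is_dropped(72, 4))
```

The less important the traffic, the lower the threshold it should be mapped to, so that it is the first to be dropped when the queue fills up.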

Next, egress queueing. First configure the buffer allocations, then the thresholds for the first queue, similar to the ingress queue.

Switch(config-if)#wrr-queue queue-limit 70 20 10

Switch(config-if)#wrr-queue threshold 1 65 70 75 80 85 90 95 100

Switch(config-if)#exit

Again something special here: the buffer allocation command ‘wrr-queue queue-limit’ needs only three values despite four queues. This is because queue 4, the priority queue, is a strict priority queue: any packet entering it will be serviced next. This means that if a lot of traffic ends up in the priority queue, it can starve the other queues, because they will no longer be serviced. The only way to counter this is to tightly control what ends up in that queue.
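The starvation effect is easy to see in a toy scheduler. The Python sketch below is an illustration only: it serves the priority queue whenever it holds a frame and otherwise does a plain round robin over the normal queues (the WRR weights are left out to keep it short). All names are made up for the example.

```python
from collections import deque

def serve(priority_queue, normal_queues, slots):
    """Transmit up to `slots` frames; the priority queue always goes first."""
    sent = []
    turn = 0  # index of the normal queue that gets the next chance
    for _ in range(slots):
        if priority_queue:
            sent.append(priority_queue.popleft())
            continue
        # Priority queue is empty: round robin over the non-empty normal queues
        for offset in range(len(normal_queues)):
            index = (turn + offset) % len(normal_queues)
            if normal_queues[index]:
                sent.append(normal_queues[index].popleft())
                turn = (index + 1) % len(normal_queues)
                break
    return sent

priority = deque(["p1", "p2", "p3"])
normal = [deque(["a1", "a2"]), deque(["b1"])]
print(serve(priority, normal, 3))  # only priority frames leave
print(serve(priority, normal, 3))  # normal queues are served once it is empty
```

As long as frames keep arriving in the priority queue, the normal queues never get a transmit slot.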

On to the 10 GE line card. First ingress, this time with a buffer command because there are multiple queues on ingress.

Switch(config)#interface Te1/9/1

Switch(config-if)#rcv-queue queue-limit 40 20 20 0 0 0 20

Warning: rcv queue-limit will not be applied in hardware.

To modify rcv queue-limit in hardware, all of the interfaces below

must be put into ‘trust cos’ state:

Te1/9/1 Te1/9/2 Te1/9/3 Te1/9/4

Switch(config-if)#

Switch(config-if)#rcv-queue threshold 2 80 100

Switch(config-if)#rcv-queue threshold 3 90 100

HW-QOS: Rx high threshold 2 is fixed at 100 percent

Propagating threshold configuration to: Te1/9/1 Te1/9/2 Te1/9/3 Te1/9/4

Warning: rcv thresholds will not be applied in hardware.

To modify rcv thresholds in hardware, all of the interfaces below

must be put into ‘trust cos’ state:

Te1/9/1 Te1/9/2 Te1/9/3 Te1/9/4

Switch(config-if)#end

The 10 GE line card has one ASIC per eight ports, one sub-ASIC for QoS per four ports. It has two thresholds per queue. The rest isn’t any different from previous configurations.

Queue mappings

As mentioned already, internally the 6500 platform uses CoS to determine in which queue a packet (and thus flow) ends up, although some newer line cards can work with both CoS and DSCP. The mappings are again similar to previous configurations:

Switch(config)#interface Gi1/2/1

Switch(config-if)#rcv-queue cos-map 1 4 5

Propagating cos-map configuration to: Gi1/2/1 Gi1/2/2 Gi1/2/3 Gi1/2/4 Gi1/2/5 Gi1/2/6 Gi1/2/7 Gi1/2/8 Gi1/2/9 Gi1/2/10 Gi1/2/11 Gi1/2/12

Warning: rcv cosmap will not be applied in hardware.

To modify rcv cosmap in hardware, all of the interfaces below

must be put into ‘trust cos’ state:

Gi1/2/1 Gi1/2/2 Gi1/2/3 Gi1/2/4 Gi1/2/5 Gi1/2/6 Gi1/2/7 Gi1/2/8 Gi1/2/9 Gi1/2/10 Gi1/2/11 Gi1/2/12

Switch(config-if)#wrr-queue cos-map 2 3 6

Propagating cos-map configuration to: Gi1/2/1 Gi1/2/2 Gi1/2/3 Gi1/2/4 Gi1/2/5 Gi1/2/6 Gi1/2/7 Gi1/2/8 Gi1/2/9 Gi1/2/10 Gi1/2/11 Gi1/2/12

Switch(config-if)#priority-queue cos-map 1 5

Propagating cos-map configuration to: Gi1/2/1 Gi1/2/2 Gi1/2/3 Gi1/2/4 Gi1/2/5 Gi1/2/6 Gi1/2/7 Gi1/2/8 Gi1/2/9 Gi1/2/10 Gi1/2/11 Gi1/2/12

The following things are configured here: CoS 5 is mapped to ingress queue 1, threshold 4. Next, CoS 6 is mapped to egress queue 2, threshold 3. The third command maps CoS 5 to the first (and only) priority queue. Unlike the other two commands, ‘priority-queue cos-map’ takes no threshold argument: the first number is the queue and everything after it is a CoS value.
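The argument order of the two threshold-based mapping commands (queue, then threshold, then one or more CoS values) can be captured in a few lines of Python. This is a hypothetical helper for reading configurations, not something the switch provides, and it deliberately handles only ‘rcv-queue cos-map’ and ‘wrr-queue cos-map’.

```python
def parse_cos_map(command):
    """Break an 'rcv-queue cos-map' or 'wrr-queue cos-map' line into its parts."""
    words = command.split()
    if len(words) < 5 or words[0] not in ("rcv-queue", "wrr-queue") or words[1] != "cos-map":
        raise ValueError(f"not a supported cos-map command: {command!r}")
    # Order of the numbers: queue, threshold, then the CoS values
    queue, threshold, *cos_values = (int(word) for word in words[2:])
    return {
        "direction": "ingress" if words[0] == "rcv-queue" else "egress",
        "queue": queue,
        "threshold": threshold,
        "cos": cos_values,
    }

print(parse_cos_map("rcv-queue cos-map 1 4 5"))
print(parse_cos_map("wrr-queue cos-map 2 3 6"))
```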

DSCP to CoS mapping

Mapping CoS to a queue is okay, but what if you’re using DSCP for marking? And what if you have access ports on the 6500? CoS is part of the 802.1Q header, which untagged frames on access ports do not carry. For this you can do a DSCP to CoS mapping. For example, to map DSCP EF to CoS 5 and DSCP AF41 to CoS 3:

Switch(config)#mls qos map dscp-cos 46 to 5

Switch(config)#mls qos map dscp-cos 34 to 3

Now packets incoming or remarked on ingress as DSCP EF will be treated as CoS 5 in the queueing.
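The command takes decimal DSCP values, so the names have to be converted first. The standard encodings are EF = 46, AFxy = 8x + 2y and CSn = 8n. A short Python sketch of that conversion (the function name is my own):

```python
def dscp_value(name):
    """Convert a DSCP name such as 'EF', 'AF41' or 'CS5' to its decimal value."""
    name = name.upper()
    if name == "EF":
        return 46
    if name.startswith("AF") and len(name) == 4:
        af_class, drop_precedence = int(name[2]), int(name[3])
        if 1 <= af_class <= 4 and 1 <= drop_precedence <= 3:
            return 8 * af_class + 2 * drop_precedence
    if name.startswith("CS") and len(name) == 3:
        selector = int(name[2])
        if 0 <= selector <= 7:
            return 8 * selector
    raise ValueError(f"unknown DSCP name: {name!r}")

print(dscp_value("EF"), dscp_value("AF41"))
```

AF41 works out to 8 × 4 + 2 × 1 = 34, which is the value used in the second command above.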

Bandwidth sharing & random-detect

There are no shaping commands on the 6500 platform, only sharing of bandwidth. Again, only three values are possible for four queues, as the priority queue will just take the bandwidth it needs. You can use shared weights using the ‘wrr-queue bandwidth’ command, but it’s easier to add the ‘percent’ keyword and let the values total 100 for a clearer configuration:

Switch(config-if)#wrr-queue bandwidth percent 80 10 10

80% for the first queue, 10% for each of the other two.
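When plain weights are used instead of the ‘percent’ keyword, each queue's share is its weight divided by the sum of all weights. A minimal Python sketch of that calculation follows; the weights 100, 150 and 200 are made-up example values, and the hardware may round internally in ways this does not model.

```python
def bandwidth_shares(weights):
    """Return each queue's share of the remaining bandwidth as a percentage."""
    total = sum(weights)
    return [round(100 * weight / total, 1) for weight in weights]

# The values from 'wrr-queue bandwidth percent 80 10 10' already total 100
print(bandwidth_shares([80, 10, 10]))

# Arbitrary weights are harder to read at a glance
print(bandwidth_shares([100, 150, 200]))
```

These shares only apply to whatever bandwidth the priority queue leaves over.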

The 6500 platform also supports random early detection in hardware, a function borrowed from routers. It can be activated for a non-priority queue, for example the second queue:

Switch(config-if)#wrr-queue random-detect 2

The thresholds for RED can be modified using the ‘wrr-queue random-detect min-threshold’ and ‘wrr-queue random-detect max-threshold’ commands. They configure the thresholds (eight for the gigabit line card) with a minimum value at which RED starts to work, and a maximum value at which RED starts to drop all packets entering the queue.
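The relation between the two thresholds can be sketched with the textbook RED curve: no drops below the minimum, a linearly rising drop probability between minimum and maximum, and every packet dropped at or above the maximum. The Python below is that generic model only. The `max_p` parameter (the probability reached just below the maximum threshold) is an assumption for illustration, and the exact behaviour of the 6500 hardware is not covered here.

```python
def red_drop_probability(fill_percent, min_threshold, max_threshold, max_p=0.1):
    """Textbook RED: drop probability as a function of queue fill.

    max_p is a hypothetical tuning value, not something taken from the
    6500 configuration shown in this post.
    """
    if fill_percent < min_threshold:
        return 0.0          # RED is not active yet
    if fill_percent >= max_threshold:
        return 1.0          # everything entering the queue is dropped
    span = max_threshold - min_threshold
    return max_p * (fill_percent - min_threshold) / span

for fill in (30, 60, 80):
    print(fill, red_drop_probability(fill, min_threshold=40, max_threshold=80))
```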

Show command

So far I haven’t listed a ‘show’ command. This is because everything you need to know about a certain port is gathered in one command: ‘show queueing interface’. Its output is very long and shows the queue buffers, thresholds and drops for both ingress and egress.

The DSCP to CoS mapping is switch-wide, so this is still a separate command:

Switch#show mls qos maps dscp-cos

Dscp-cos map: (dscp= d1d2)

d1:d2 0 1 2 3 4 5 6 7 8 9

-------------------------------------

0 : 00 00 00 00 00 00 00 00 01 01

1 : 01 01 01 01 01 01 02 02 02 02

2 : 02 02 02 02 03 03 03 03 03 03

3 : 03 03 04 04 03 04 04 04 04 04

4 : 05 05 05 05 05 05 05 05 06 06

5 : 06 06 06 06 06 06 07 07 07 07

6 : 07 07 07 07

Again, d1 is the first digit, d2 the second: for DSCP 46, d1 is 4, d2 is 6.
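The table above is consistent with a default mapping of CoS = DSCP divided by 8 (rounded down), plus the two entries configured earlier (46 to 5, which matches the default anyway, and 34 to 3). The Python sketch below rebuilds the table on that assumption and shows how a d1/d2 lookup works. The function names are illustrative.

```python
def build_dscp_cos_map(overrides=None):
    """Start from CoS = DSCP // 8 and apply any configured overrides."""
    mapping = {dscp: dscp // 8 for dscp in range(64)}
    mapping.update(overrides or {})
    return mapping

def format_table(mapping):
    """Render the map in the d1:d2 layout of 'show mls qos maps dscp-cos'."""
    lines = []
    for d1 in range(7):
        cells = [
            f"{mapping[d1 * 10 + d2]:02d}"
            for d2 in range(10)
            if d1 * 10 + d2 < 64  # DSCP only goes up to 63
        ]
        lines.append(f"{d1} : " + " ".join(cells))
    return "\n".join(lines)

dscp_cos = build_dscp_cos_map({46: 5, 34: 3})
print(format_table(dscp_cos))
print(dscp_cos[4 * 10 + 6])  # d1 = 4, d2 = 6 -> DSCP 46
```

Row 3 is where the override shows up: DSCP 34 maps to CoS 3 while its neighbours 32 to 39 map to CoS 4.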